8 Challenges Developers Face in AI Projects (And How to Solve Them)

Riten Debnath

11 Oct, 2025

In 2026, Artificial Intelligence has become central to transformation across industries — from healthcare and finance to retail and manufacturing. Despite the enthusiasm, many AI projects fail not due to lack of technology but because developers face complex, real-world challenges that go beyond scripting models.

If you're a developer, freelancer, or professional diving into AI, knowing these challenges upfront and how to tackle them will save you time, resources, and headaches. It also helps you build stronger portfolios that demonstrate not only technical skills but resilience and problem-solving.

I’m Riten, founder of Fueler, a platform that helps freelancers and professionals get hired through their work samples. In this article, I will walk you through the eight most significant challenges AI developers face in 2026 and provide clear, actionable strategies to overcome each one. Your ability to address these problems defines your success in AI projects and your professional credibility.

1. Data Quality and Quantity: The Backbone of AI Success

AI models thrive on data, but the reality is messy. Data collected from real-world sources is often incomplete, noisy, inconsistent, or biased. Without quality data, even the best algorithms flounder.

Challenges:

- Missing or sparse data points cause weak model training.

- Data labeling is time-consuming and costly yet crucial for supervised learning.

- Hidden biases in data lead to unfair or inaccurate AI outcomes.

- Inconsistent data from multiple sources complicates preprocessing.

How to Solve It:

- Use tools like Labelbox and Supervisely for semi-automated, scalable, and accurate labeling workflows.

- Employ data augmentation techniques to artificially expand datasets, especially in fields like image recognition.

- Audit datasets regularly for bias using fairness toolkits such as IBM AI Fairness 360 (AIF360).

- Standardize and normalize data early using preprocessing pipelines with Python libraries like Pandas and Scikit-learn.
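The preprocessing step in the last bullet can be sketched without any dependencies. This is a minimal illustration, assuming a single numeric column whose missing values are encoded as `None`; the function name `impute_and_standardize` and the sample `ages` column are hypothetical. In a real pipeline, Pandas and Scikit-learn would typically handle the same steps.

```python
from statistics import mean, median, pstdev


def impute_and_standardize(values):
    """Fill missing entries (None) with the median, then z-score the column."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("column has no observed values")
    fill = median(observed)
    filled = [fill if v is None else float(v) for v in values]
    mu = mean(filled)
    sigma = pstdev(filled)
    if sigma == 0:
        # A constant column carries no signal; map it to zeros.
        return [0.0 for _ in filled]
    return [(v - mu) / sigma for v in filled]


# Hypothetical column with two missing values.
ages = [20, None, 30, 40, None]
scaled = impute_and_standardize(ages)
```

Median imputation is used here because it is less sensitive to outliers than the mean. Whichever statistic you choose, compute it on the training split only and reuse it at inference time.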

Why it matters: Quality data is the foundation of every effective AI project. No matter how advanced your model is, poor data will yield poor results. Ensuring clean, sufficient, and unbiased data upfront maximizes model accuracy and trustworthiness.

2. Selecting the Right Model and Avoiding Overfitting

Choosing the right algorithm is critical but complicated by the dizzying number of options in 2026, from simple linear regression to complex transformers.

Challenges:

- Overfitting means a model performs well on training data but poorly on new, real-world data.

- Underfitting leads to oversimplified models missing key patterns.

- Balancing model complexity and interpretability is often overlooked.

How to Solve It:

- Start simple; experiment with classical models like decision trees or logistic regression before exploring deep learning.

- Use cross-validation to detect overfitting, and regularization techniques such as dropout or L1/L2 penalties to reduce it.

- Leverage AutoML tools like Google Cloud AutoML (now part of Vertex AI) or H2O Driverless AI to automate model tuning and selection.

- Utilize visualization tools like TensorBoard or SHAP to interpret model behavior and improve transparency.
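To make the cross-validation and L2-penalty advice concrete, here is a small dependency-free sketch. It assumes a one-feature linear model without an intercept; the helper names (`k_fold_indices`, `fit_ridge_1d`, `cv_mse`) and the toy data are illustrative only, not taken from any library.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val = list(range(start, start + size))
        train = list(range(start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


def fit_ridge_1d(xs, ys, l2):
    """Closed-form L2-penalized fit of y ~ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + l2)


def cv_mse(xs, ys, l2, k=3):
    """Average validation mean squared error across k folds."""
    fold_errors = []
    for train, val in k_fold_indices(len(xs), k):
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], l2)
        fold_errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in val) / len(val))
    return sum(fold_errors) / len(fold_errors)


# Noiseless toy data, so the best penalty here is no penalty at all.
xs = [1, 2, 3, 4, 5, 6]
ys = [2 * x for x in xs]
scores = {l2: cv_mse(xs, ys, l2) for l2 in (0.0, 1.0, 10.0)}
best_l2 = min(scores, key=scores.get)
```

The model is always scored on data it did not see during fitting, and the penalty strength is chosen by that held-out score rather than by training error. On noisy real data, a nonzero penalty often wins.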

Why it matters: Picking and tuning the right model lays the groundwork for robust, generalizable AI that works well on new, unseen data. Avoiding overfitting saves costly retraining and rebuilds later.

3. Infrastructure Complexity and Scalability

Deploying AI at scale requires a resilient infrastructure that can handle large volumes of data and traffic without failure or massive latency.

Challenges:

- Building infrastructure for model training and real-time inference can overwhelm small teams.

- Scaling from prototype to production while maintaining performance is hard.

- Managing compute costs, especially with resource-heavy deep learning, is tricky.

How to Solve It:

- Use cloud platforms like AWS SageMaker, Google Vertex AI (the successor to AI Platform), or Azure Machine Learning, which handle much of the scaling and infrastructure management.

- Containerize models with Docker and orchestrate with Kubernetes for flexibility and easy deployment.

- Optimize models for inference by exporting them to a portable format like ONNX for use with an optimized runtime, or by applying quantization techniques.

- Plan capacity based on traffic predictions, and leverage serverless architectures where possible.
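The quantization idea mentioned above can be illustrated in a few lines. This is a simplified sketch of symmetric int8 quantization on a non-empty list of weights, not what any particular runtime does internally; `quantize_int8` and `dequantize` are hypothetical helper names.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights from a small layer.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Whether that trade is acceptable depends on how sensitive the model's accuracy is, so measure it before and after.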

Why it matters: Without scalable, reliable infrastructure, AI models can fail spectacularly in production. Good infrastructure design ensures your AI delivers consistent value under real-world loads.

4. Lack of Explainability and Trust

Many AI models, particularly deep learning ones, operate like black boxes where the decision process isn’t transparent, which undermines user trust and regulatory approval.

Challenges:

- Stakeholders demand explanations of how predictions are made.

- Regulations like GDPR can require that automated decisions be explainable to the people they affect.

- Limited interpretability can hide biases or errors.

How to Solve It:

- Incorporate interpretability libraries like LIME and SHAP to explain individual model predictions.

- Use inherently interpretable models such as decision trees or rule-based systems where possible.

- Document model design, training data, and evaluation metrics clearly.

- Regularly test models for bias and fairness using toolkits like Fairlearn.
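As a rough, library-free illustration of model-agnostic explanation, the sketch below uses permutation importance: shuffle one feature at a time and measure how much the error grows. Note that this is a different and simpler technique than LIME or SHAP, which explain individual predictions; `toy_model` and the data are hypothetical stand-ins for a trained model and a validation set.

```python
import random


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, repeats=5, seed=0):
    """Average increase in error when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        increases = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(model, shuffled, targets) - baseline)
        importances.append(sum(increases) / repeats)
    return importances


def toy_model(row):
    # Hypothetical "trained" model that only uses the first feature.
    return 3.0 * row[0]


rows = [[float(i), float((i * 7) % 5)] for i in range(8)]
targets = [3.0 * r[0] for r in rows]
importances = permutation_importance(toy_model, rows, targets, n_features=2)
```

A feature the model ignores gets an importance of zero, while a feature it depends on gets a positive score. That makes this a quick sanity check for whether a model leans on inputs it should not.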

Why it matters: Explainability builds trust among users, clients, and regulators. In 2026, transparency is a core requirement for ethical and successful AI deployment.

5. Integration Challenges with Existing Systems

Integrating AI into legacy infrastructure or new applications is complex—models must work smoothly with databases, user interfaces, and business processes.

Challenges:

- Data silos hamper training and updating models.

- Real-time inference requires low-latency integration with applications.

- Infrastructure heterogeneity complicates connection between AI services and existing software stacks.

How to Solve It:

- Build modular APIs using frameworks like FastAPI or Flask to expose AI models for easy consumption.

- Use message brokers like RabbitMQ or Kafka for asynchronous communication between services.

- Collaborate closely with DevOps and engineering teams early to align infrastructure requirements.

- Design deployment pipelines that support frequent updating and versioning without downtime.
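The asynchronous pattern behind the message-broker bullet can be sketched in-process. Here `queue.Queue` and a worker thread stand in for a real broker such as RabbitMQ or Kafka and a separate inference service; the `predict` function and its threshold are hypothetical placeholders for a deployed model.

```python
import queue
import threading


def predict(features):
    # Hypothetical model: flags inputs whose feature sum exceeds 1.0.
    return sum(features) > 1.0


def inference_worker(requests, results):
    """Consume (request_id, features) messages until a None sentinel arrives."""
    while True:
        message = requests.get()
        if message is None:
            break
        request_id, features = message
        results[request_id] = predict(features)


requests = queue.Queue()
results = {}
worker = threading.Thread(target=inference_worker, args=(requests, results))
worker.start()

# The producer enqueues work and moves on without waiting for each prediction.
for request_id, features in enumerate([[0.2, 0.3], [0.9, 0.4]]):
    requests.put((request_id, features))

requests.put(None)  # signal shutdown
worker.join()
```

Decoupling the producer from the model this way lets the application keep accepting requests during traffic spikes, and lets the inference service be scaled or redeployed independently.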

Why it matters: Seamless integration is necessary for AI to add real business value, enhance user experience, and maintain operational efficiency.

6. Data Privacy and Security Concerns

AI projects often handle sensitive data, so privacy and security are paramount to preventing breaches and misuse. Many teams now rely on synthetic data to work with realistic yet privacy-safe datasets. Private AI solutions aim to keep personally identifiable information (PII) protected throughout data collection, model training, and deployment.

Challenges:

- Protecting personally identifiable information (PII).

- Avoiding data leaks during model training and deployment.

- Complying with laws like GDPR, HIPAA, and emerging privacy regulations.

How to Solve It:

- Encrypt data in transit with TLS and data at rest with a standard cipher such as AES.

- Use federated learning or differential privacy approaches to enable training without centralized sensitive data.

- Conduct regular security audits and vulnerability assessments on AI infrastructure.

- Establish clear access controls and audit trails.
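To give a feel for the differential privacy approach, here is a minimal sketch of the Laplace mechanism applied to a counting query. The dataset, the `epsilon` value, and the function names are assumptions chosen for illustration; production systems should use a vetted library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Noisy count; a counting query has sensitivity 1, so scale = 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 67, 38, 44]  # hypothetical sensitive column
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives more accurate answers. Because adding or removing one person changes a count by at most 1, the noise scale only needs to be `1 / epsilon`.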

Why it matters: Privacy violations carry heavy fines and damage reputations. Responsible AI deployment protects both users and organizations.

7. Continuous Model Maintenance and Drift

AI models degrade over time as real-world data changes, a problem known as model drift, leading to inaccurate predictions.

Challenges:

- Identifying when a model needs retraining or replacement.

- Building pipelines for continuous data collection and retraining.

- Managing multiple model versions simultaneously.

How to Solve It:

- Deploy monitoring tools like Evidently AI or Arize AI to detect data and concept drift automatically.

- Automate retraining pipelines using workflow tools such as Apache Airflow or Kubeflow Pipelines.

- Use feature stores to maintain consistent, well-governed input data across training and inference.

- Regularly validate models with fresh test data to ensure ongoing accuracy.
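One simple way to flag data drift is to compare the distribution of a feature at training time with its distribution in production. The sketch below computes the two-sample Kolmogorov-Smirnov statistic by hand; it is one common drift test, not a description of how Evidently AI or Arize AI work internally, and the `threshold` of 0.3 and the sample values are arbitrary assumptions.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the two empirical cumulative distributions."""
    ref, cur = sorted(reference), sorted(current)
    points = sorted(set(ref) | set(cur))
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in points
    )


def has_drifted(reference, current, threshold=0.3):
    """Flag drift when the distributions differ by more than the threshold."""
    return ks_statistic(reference, current) > threshold


# Hypothetical feature values at training time and in production.
training_feature = [10, 12, 11, 13, 12, 11, 10, 12]
live_feature = [15, 17, 16, 18, 16, 17, 15, 16]
drift = has_drifted(training_feature, live_feature)
```

A check like this can run on a schedule for each input feature and trigger the retraining pipeline when it fires. Tune the threshold per feature, since a fixed value will be too noisy for some features and too lax for others.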

Why it matters: Without continuous maintenance, AI solutions quickly become obsolete. Proactive drift management keeps performance and value high.

8. Talent Shortage and Team Collaboration Issues

Building and deploying AI projects requires cross-functional teams, yet talent shortages and collaboration gaps remain a major bottleneck.

Challenges:

- Difficulty finding developers skilled in AI, data engineering, and DevOps simultaneously.

- Communication gaps between data scientists, developers, and business stakeholders.

- Managing workflow and accountability across diverse teams.

How to Solve It:

- Invest in upskilling existing teams with courses from platforms like Coursera or Udacity focused on MLOps and AI engineering.

- Employ collaboration tools like Jira, Confluence, and GitHub Projects to improve transparency and streamline communication.

- Use clear documentation and shared repositories to bridge knowledge gaps.

- Encourage a culture of shared responsibility, promoting collaboration between domain experts and technical teams.

Why it matters: Strong teams enable faster, more reliable AI delivery. Addressing talent and collaboration challenges is critical to scaling AI initiatives successfully.

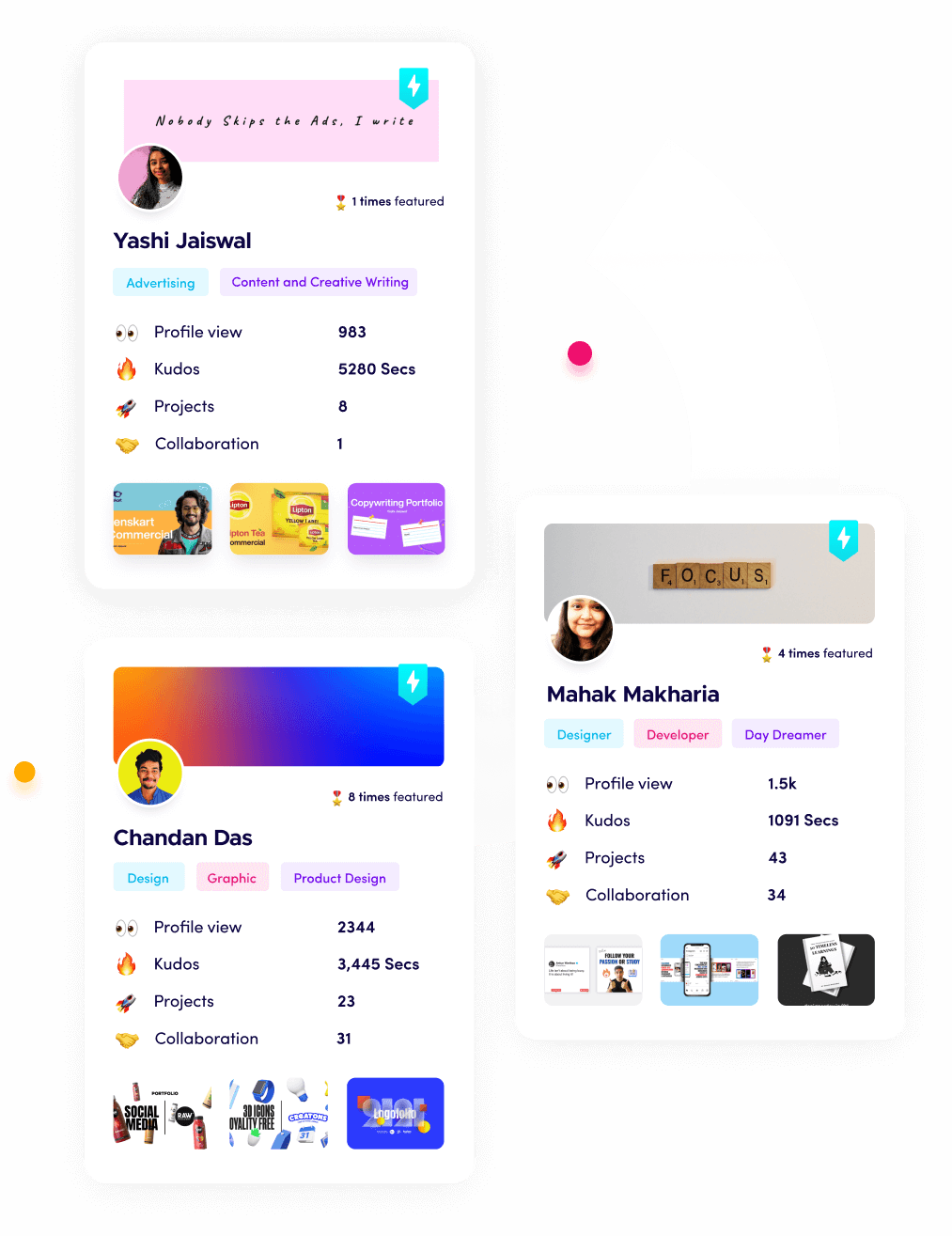

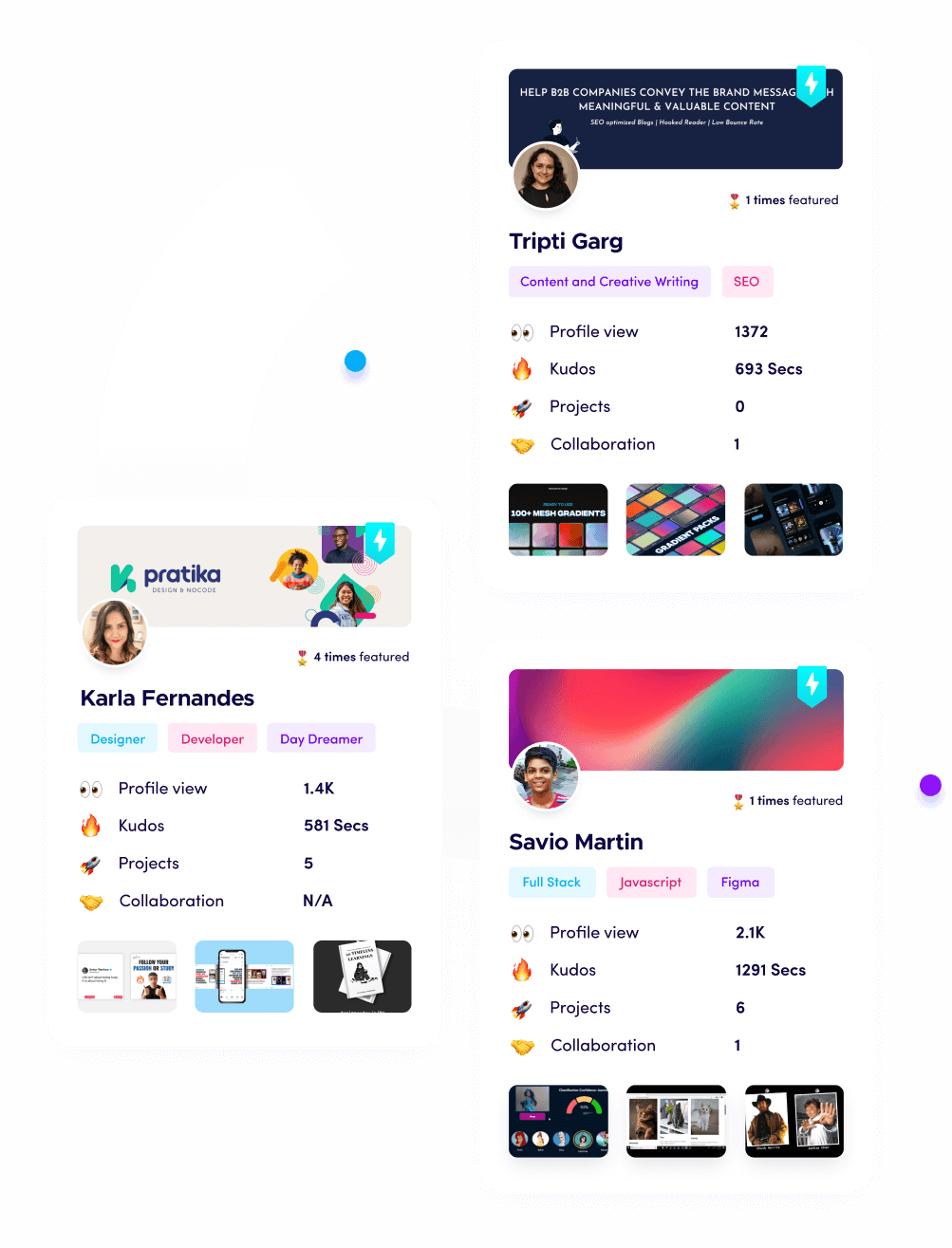

How Fueler Helps You Showcase AI Problem-Solving Expertise

In 2026, simply understanding these challenges is not enough. Hiring managers want to see how you’ve solved them on real projects. Fueler allows you to build a professional portfolio featuring your AI projects including code, deployment strategies, problem-solving narratives, and outcomes. By clearly documenting how you navigated common AI challenges, you demonstrate credibility, experience, and the leadership that employers value.

Your portfolio on Fueler becomes more than just a presentation; it is your proof of skill and your bridge to new opportunities in the competitive AI landscape.

Final Thoughts

AI projects come with unique, evolving challenges: data issues, model selection dilemmas, infrastructure scale, explainability, integration, privacy, drift, and talent gaps. Each challenge is an opportunity to prove your expertise and build more reliable, ethical, and impactful AI systems.

As AI adoption grows, developers who master both the technical solutions and how to communicate these efforts professionally will lead the next wave of innovation.

FAQs

1. What are common AI challenges developers face in 2026?

Key challenges include data quality, model explainability, infrastructure complexity, security, and managing model drift.

2. How can I improve data quality for AI projects?

Use data labeling tools, augmentation, and bias audits, and maintain stringent preprocessing pipelines.

3. Which tools help automate AI deployment and monitoring?

Popular tools are TensorFlow Serving, Kubeflow, MLflow, Evidently AI, AWS SageMaker, and Kubernetes.

4. How do I handle AI model explainability?

Use interpretability libraries like SHAP and LIME and document model processes transparently.

5. How important is collaboration in AI project success?

It is vital. AI projects require multidisciplinary teamwork supported by clear communication and documentation.

What is Fueler Portfolio?

Fueler is a career portfolio platform that helps companies find the best talent for their organization based on candidates' proof of work. You can create your portfolio on Fueler; thousands of freelancers around the world use it to build professional-looking portfolios and become financially independent.

Sign up for free on Fueler or get in touch to learn more.